Another in my series of "Didja Know?" This might be old news to you, but I just found out that version 5 of the VMware vCenter Converter will fix virtual machine misalignment! So what is misalignment and why should you care? That's a blog post all its own, but basically it's when the partitions of the guest file system don't start on the block boundaries of the storage system. Okay, so what? The problem is that data gets split across storage blocks, so a read or write in the guest touches more blocks on the storage system than it would if the storage and OS were aligned. This can result in a lot more work on your storage device than is necessary. For those who don't know, this is much more common than you might realize; until recently, many operating systems had alignment issues with many storage vendors. Here's a really good paper that explains misalignment in great detail!
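To make the arithmetic concrete, here's a minimal sketch in Python. It is not taken from any VMware or NetApp tool; the function names are made up for illustration, and it assumes 512-byte sectors, a 4 KB storage block, and the classic Windows XP default of starting the first partition at sector 63. Check your own storage vendor's block size before relying on the numbers.

```python
SECTOR_SIZE = 512   # bytes per sector (assumption)
BLOCK_SIZE = 4096   # storage block size in bytes (assumption, vendor-specific)


def is_aligned(start_sector, block_size=BLOCK_SIZE, sector_size=SECTOR_SIZE):
    """Return True if the partition's starting byte offset falls on a block boundary."""
    return (start_sector * sector_size) % block_size == 0


def blocks_touched(start_sector, io_offset, io_length,
                   block_size=BLOCK_SIZE, sector_size=SECTOR_SIZE):
    """Count the storage blocks covered by one guest I/O.

    io_offset is the byte offset of the I/O inside the partition,
    io_length is its size in bytes.
    """
    first_byte = start_sector * sector_size + io_offset
    last_byte = first_byte + io_length - 1
    return last_byte // block_size - first_byte // block_size + 1


if __name__ == "__main__":
    # Hypothetical layouts: sector 63 (old default) vs. sector 2048 (1 MB boundary)
    for start in (63, 2048):
        print(start, is_aligned(start), blocks_touched(start, 0, 4096))
```

With these assumptions, a single 4 KB guest I/O on the partition that starts at sector 63 straddles two storage blocks, while the same I/O on the partition that starts at sector 2048 fits in exactly one.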

There are a lot of tools to correct misalignment, and the best practice is to fix it before you even put a machine into production. Today I'm going to show how VMware vCenter Converter can fix alignment. VMware vCenter Converter is normally used to convert physical machines into virtual machines, but I was delighted to hear that they added this feature!

Below I'm using the NetApp Virtual Storage Console (VSC) to clone a Windows XP machine. The VSC tells me the machine is misaligned and asks whether I'd like to proceed with the clone. If I proceed, I will carry the misalignment over to the clone. VSC has tools built in to correct misalignment as well, but that's a demonstration for another day.

So let's fix the problem! I run the VMware Converter and tell it that I want to Convert a machine.

Select where the misaligned machine lives and the machine itself.

Enter the credentials of the vCenter you'd like the converted machine to go to.

Give the new machine a name and choose where it should be located.

Select the datastore the machine should live on and what virtual machine version you'd like.

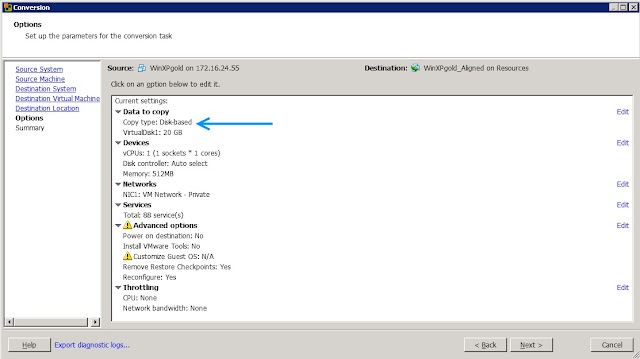

Click on "Data to copy".

Here's where things change! Choose "Select volumes to copy".

Ensure that "Create optimized partition layout" is checked. This will correct the misalignment on the converted machine.

Once the conversion has completed, use the VSC to check for misalignment. Here I run "Create Rapid Clones" again. This time you can see that the converted virtual machine passed the misalignment scan because it is now aligned!

I hope you enjoyed this "Didja Know?" and that it helps keep your environment misalignment-free!

Until Next Time!