Friday, December 21, 2012

Thursday, December 20, 2012

Clustered ONTAP Explained Through Brains

Hi All,

A loyal follower of mine asked if I would put more pictures into my blogs, so here goes. I have this thing for brains, so I figured I would explain Clustered ONTAP through the use of brains.

Warning - Do NOT Try This At Home

Say you were a mad scientist and you began splicing brains together. In my example you only have two brains so far. Even though each brain came from a single person, each half has different functions and thoughts that are linked together through the corpus callosum, which allows both halves to communicate. In your crazed genius you were able to bridge two brains together with another special corpus callosum, so now all four halves can communicate with one another. Now we have two separate brains hooked up together in a cluster. Each half has its own functions, but those functions can pass to different halves, or to a different brain altogether!

There, you have Clustered ONTAP! Can't see it yet? Each half of a brain is a node in the cluster, designed to perform functions, or in the case of Clustered ONTAP, to serve volumes. Each half, or node, can communicate with and take over the functions of the other half, its partner, because the two are hooked together through an HA interconnect. The two brains are hooked together through a cluster network running at 10 gigabit. In my example both brains are in a single Vserver, so they can share each other's functions, but they could be set to not pass workloads to each other if you put them in separate Vservers, or jars.

Take a look at my picture. The brain on the left represents me, and you can see I have two functions in mind. In Clustered ONTAP these would be volumes where data is being stored. So when my better half kicks me in the butt in the morning and tells me it's time to go to work, that function or volume passes from her brain to mine. So now I'm processing that volume too. When I tell her it's time to have fun and pass over the play-video-games volume, she'll start to play video games.

I hope you enjoyed my explanation of Clustered ONTAP through the use of brains.

Until Next Time!

Wednesday, December 19, 2012

NVRAM - Our Catalyst Friend

Hi Folks,

This will be a quickie, but I felt it deserved its own post. I get a lot of questions regarding the purpose of NVRAM. It's a bit of an enigma, so I thought I'd clarify its functionality.

Here's what it is NOT:

1. Performance Acceleration Card

2. Read Cache

3. Write Cache

4. Used to calculate parity

5. Used by WAFL to map where data should be placed on disk

NVRAM is a short-term transaction log, and it enables RAM to coalesce writes to minimize head movement and the number of writes to disk. While those writes are in RAM, they are mirrored to NVRAM. All of the goodness and logic built into ONTAP and WAFL is made possible by the insurance NVRAM provides. If the power goes out, all of those writes in RAM are gone, so NVRAM steps in: because it has a battery backup, it holds on to its contents so they can be replayed to disk once the power comes back. This way nothing is lost! So NVRAM doesn't make writes more efficient (that's somebody else's job); it's there to catch data if there's a power outage.
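If it helps to see the idea in motion, here is a toy Python sketch of a write buffer protected by a battery-backed log. It is only an illustration: the class and method names (`ToyController`, `flush`, `recover`) are made up for this example and are not ONTAP internals, and the "replay the log after a power loss" step is a simplified assumption about how the log gets used.

```python
class ToyController:
    """Toy model of buffered writes protected by a battery-backed log."""

    def __init__(self):
        self.ram = {}      # volatile write buffer: block id -> data
        self.nvram = []    # battery-backed log of (block id, data) entries
        self.disk = {}     # what has actually reached disk

    def write(self, block, data):
        # The write lands in RAM and is mirrored to the NVRAM log.
        self.ram[block] = data
        self.nvram.append((block, data))

    def flush(self):
        # Normal path: the batch in RAM goes to disk in one pass, after
        # which the log entries covering that batch are no longer needed.
        self.disk.update(self.ram)
        self.ram.clear()
        self.nvram.clear()

    def power_loss(self):
        # RAM is volatile, so its contents vanish; the NVRAM log survives.
        self.ram.clear()

    def recover(self):
        # Replay the surviving log so no logged write is lost.
        for block, data in self.nvram:
            self.disk[block] = data
        self.nvram.clear()


ctrl = ToyController()
ctrl.write(7, "a")
ctrl.write(3, "b")
ctrl.flush()            # both blocks reach disk together
ctrl.write(7, "c")      # so far this lives only in RAM and in the log
ctrl.power_loss()
ctrl.recover()
print(ctrl.disk)
```

Notice that the log never speeds anything up in this sketch. The batching happens in RAM; the log only matters on the `power_loss` path.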

Until Next Time!

Monday, December 17, 2012

Why Clustered ONTAP for Virtual Desktops - Ponce de Leon Would Have Loved it!

Hi All,

For those of you that have been NetApp fans for a while, I'm sure you've heard of Clustered ONTAP. It's had a variety of names over the years (GX, Cluster-Mode, C-Mode), but essentially what you were seeing is the evolution of a new butt-kicking product!

What's one of the hardest things we have to face with technology? We've all been hit by it: upgrading! Technology moves so fast that by the time you buy anything, it's already outdated. Replacing the hardware sucks, but migrating your data sucks worse! How about patching? That's an administrator's nightmare! As painless as vendors try to make patching, it almost always requires downtime, and it's inevitable that something doesn't come back up.

With Clustered ONTAP, you no longer need to halt production to add new hardware, patch, or migrate data! How is this possible? Let's take a look at our friend Data ONTAP 7-Mode and vFilers. So what's a vFiler? Basically, a virtual filer inside of a filer. And as with a hypervisor, you can have multiple vFilers within a single filer. Take that power and capability, increase it, and you've got vServers in Clustered ONTAP. With Clustered ONTAP, everything is dealt with at the vServer layer. So what? Remember when we didn't have FlexVols and how cool it was when you got them? Yeah, it's like that.

In general, vServers can span multiple heads and aggregates, and what that gives you is the ability to move stuff on the fly. Move stuff on the fly, you say? So when I need to do maintenance, I can just migrate volumes to another node and keep production running? Why yes, yes you can! You can move volumes with no downtime, then upgrade, replace, or service a node, and your users will be none the wiser. In essence, your cluster is now immortal! Muahahaha! The cool thing is you can have high-end and low-end nodes in your cluster for both dev/test and production, all in one.
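Here is a small Python sketch of why that works, under a big simplifying assumption: clients address a volume by its vServer and volume name, never by the node that happens to host it. Everything in it (`ToyCluster`, `move_volume`, `evacuate`, the node and volume names) is hypothetical and only models the idea; it is not the ONTAP CLI or API.

```python
class ToyCluster:
    """Toy model: volumes live on nodes, clients address them by vServer path."""

    def __init__(self, nodes):
        self.nodes = set(nodes)
        self.location = {}          # (vserver, volume) -> hosting node

    def create_volume(self, vserver, volume, node):
        self.location[(vserver, volume)] = node

    def move_volume(self, vserver, volume, dest_node):
        # Only the hosting node changes; the name clients use does not.
        if dest_node not in self.nodes:
            raise ValueError(f"unknown node: {dest_node}")
        self.location[(vserver, volume)] = dest_node

    def evacuate(self, node, dest_node):
        # Move every volume off a node so it can be serviced.
        for (vserver, volume), host in list(self.location.items()):
            if host == node:
                self.move_volume(vserver, volume, dest_node)

    def client_path(self, vserver, volume):
        # What a client sees stays the same wherever the volume lives.
        return f"/{vserver}/{volume}"


cluster = ToyCluster(["node1", "node2"])
cluster.create_volume("vdi", "desktops", "node1")
before = cluster.client_path("vdi", "desktops")
cluster.evacuate("node1", "node2")      # node1 is now free for maintenance
after = cluster.client_path("vdi", "desktops")
print(cluster.location[("vdi", "desktops")], before == after)
```

The point of the sketch is the last line: the volume ends up on a different node, but the path the users see never changes.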

So what's the big deal about virtual desktops and Clustered ONTAP? All of the coolness I've stated above, PLUS, say you have your desktops separated out by department. When crunch time hits and users need more power, you can move those users to a faster node! What if a node crashes? Migrate the users and their data to another node without them knowing. Or say you're a service provider and have multiple companies living on your cluster. You don't want them interacting, and vServers keep them apart: completely separate volumes, networking, etc. Plus, you can give administrative rights of a vServer to each group, while still being the master of the cluster.

Until Next Time

Friday, December 14, 2012

Citrix VDI with PvDisk and NetApp Best Practices - Part III Restore

Hi All,

Here it is, the long-awaited completion of the Backup and Recovery saga. As before, remember to try this in your development environment and not in production! Use at your own RISK!

One of your users calls you up and tells you that they had all their data on their PvDisk and they accidentally erased some of it. Now, before you go rushing off and restore the whole thing, we've got a few questions we need to ask!

1. Has the user added new data to his/her PvDisk since the last backup?

2. If yes, can they save it to another location before you do the restore?

3. If no, the restore will take more time. Can they wait? (I'll explain.)

With the NetApp VSC you can restore entire virtual machines, individual disks, or individual files. I'll walk you through these.

The easiest is to restore an entire machine.

1. Log into Virtual Center, find the machine, right-click it, and select NetApp > Backup and Recovery > Restore.

2. Next, select the snapshot/backup you'd like to restore.

3. Choose the entire virtual machine and Restart VM. This will overwrite the entire virtual machine and roll back all changes to when the snapshot/backup was taken. Be careful with this because any changes made after the backup will be GONE!

4. Once you're happy with the choices you've made, review the summary and finish the restore.

The virtual machine will now be restored! Now if you only want to restore certain datastores, you would go back to VM Component Selection and select only the datastore you wanted to restore.

Now what if you wanted individual files? Here you would right-click the machine and select NetApp > Backup and Recovery > Mount.

This is where things get really cool! Notice in the screenshot that you select the snapshot/backup you want, and it also lists all the virtual machines in that backup. Since all of those machines are in the volume and the magic happens at the volume level, you can restore any files from any of those machines. So what happens next? After you mount this datastore there are a number of things you can do. It's given a unique identifier so ESXi isn't confused by duplicate datastores being mounted. You can now browse the datastore for VMware files, or edit the settings of the original desktop or ANY desktop and mount the backed-up VMDK as a new hard drive on the desktop! How cool is that?! I love this feature!! Grab the files your user needs and then remove the hard drive and unmount the temporary datastore.

There's more you can do, but this is a quick glimpse of the restore power of VSC. I hope this blog was worth the wait, and if not, well then, too bad. :-)

Until Next Time!

Tuesday, December 11, 2012

Get Neil in Your Email!

Hi All,

Not sure if you've noticed, but I added a cool subscribe widget on the right-hand side of the blog. So if you'd like to get my blogs emailed to you directly, enter your email and click subscribe! I don't think you'll get any spam. I signed up to see if it worked and don't think I've gotten any spam yet, or maybe it goes to my spam folder. :-)

Many thanks to the folks that have already subscribed. I hope to continue putting out quality blogs that you'll enjoy!!

Sharefile - Sharing is Caring

Hi All,

I'm going to change gears a bit here and talk about something new! Don't worry, I haven't abandoned virtual desktops, just expanding into new waters. Today I'm going to talk to you about Citrix Sharefile on NetApp storage.

Haven't heard of Sharefile yet? How about Dropbox? It's a very cool technology, and it allows users to put their files in the cloud so they can share them with friends, co-workers, other companies, etc. Ever need to send a Word document or PowerPoint that's larger than 10 megs? Many times our email systems have been programmed to not allow files of this size through. 10 megs might not sound like a lot of data, but multiply that by the thousands of other users that might be sending large files and you can quickly overload an infrastructure. Also, I can save my files in the cloud and access them on any device!

I stand by my original assessment that users are wacky and will do things they're not supposed to, like post company data on a third-party application out in the cloud. The cloud lets us do remarkable things; the problem is, it lets us do remarkable things. Think about it.... Where is your data, is it secure, who's downloading it, who's viewing it, who's benefiting from it? Maybe your competition? In a perfect world, we could trust and there wouldn't be bad people. Unfortunately we don't live in that world and there are people who will take advantage.

So what's a company to do? Users demand Dropbox capability, but as an administrator you need to secure that data....

<<Curtain Please>>Now introducing Sharefile! <<Screams from The Audience>> Sharefile will give your users the ability to post their files into the cloud, but let security administrators sleep at night. Citrix allows you to store your data on-premise, on Citrix managed storage, or a combination of the two. Being employed by a storage company, I'm going to suggest the on-premise option. :-) For more than obvious reasons, I like on-premise because my users' data is in house. Since the data is on-premise, I can de-dupe, compress and keep an eye on it. Think of it as "your" cloud!

A lot more to talk about in later blogs!

Until Next Time

Friday, December 7, 2012

Let's Share a WAFL

Hi All,

Today I'd like to talk to you about WAFL.

No, not waffles, the NetApp Write Anywhere File Layout. I'm often asked about NetApp controllers' write performance and whether they can do RAID 1+0 or RAID 5, etc., so I felt it would be handy to discuss a bit about WAFL and how NetApp uses RAID for data resiliency. I've been focusing a lot on PVS, and for those that know PVS, you know how write intensive it is. That's where WAFL comes in.

NetApp is one of those companies that did things differently. They built a new idea from the ground up, and it really shows when you start to investigate how data is written to disk. Random writes are probably some of the most expensive operations because the platters have to spin up, the heads have to find the data, etc. Instead of doing things the traditional way, a NetApp controller will hold that data in memory and wait until it has a bunch more blocks to write to disk. Without going into a lot of technobabble, at an optimal time all that randomness is coalesced and written to disk, avoiding multiple spin-ups. The coolness factor is just beginning. The blocks can be written anywhere on disk because the OS has a map of where the free space is, hence speeding up writes even more. Even cooler still, blocks don't have to overwrite previous blocks at the same location, which, you guessed it, speeds up writes even more!
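For the curious, here is a toy Python sketch of those three ideas: hold writes in memory, lay them out using a free-space map, and never overwrite a block in place. It is a deliberately naive model. `ToyWriteAnywhere` and its fields are invented for this example, and handing an old block straight back to the free list is an assumption made to keep the sketch short, not how WAFL itself manages space.

```python
class ToyWriteAnywhere:
    """Toy model of buffering random writes and laying them out side by side."""

    def __init__(self, disk_blocks):
        self.disk = [None] * disk_blocks        # physical blocks
        self.block_map = {}                     # logical block -> physical block
        self.free = list(range(disk_blocks))    # free-space map, in disk order
        self.buffer = {}                        # pending writes held in memory
        self.flushes = 0                        # number of trips to disk

    def write(self, logical, data):
        # Writes only touch memory until the next flush.
        self.buffer[logical] = data

    def flush(self):
        # One trip to disk: each pending block takes the next free physical
        # block, so scattered logical writes land next to each other.
        if not self.buffer:
            return
        for logical, data in self.buffer.items():
            physical = self.free.pop(0)
            self.disk[physical] = data
            old = self.block_map.get(logical)
            self.block_map[logical] = physical
            if old is not None:
                # The old copy is not overwritten in place; in this toy its
                # block just goes back on the free list.
                self.free.append(old)
        self.buffer.clear()
        self.flushes += 1


fs = ToyWriteAnywhere(disk_blocks=8)
for logical, data in [(42, "x"), (7, "y"), (99, "z")]:
    fs.write(logical, data)
fs.flush()               # three random writes, one trip, blocks 0 through 2
fs.write(7, "y2")        # a rewrite lands in a new block, not over the old one
fs.flush()
print(fs.block_map, fs.flushes)
```

Logical blocks 42, 7 and 99 are nowhere near each other, yet they end up in physical blocks 0, 1 and 2, and the rewrite of block 7 goes to a fresh block instead of seeking back to the old one.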

But Neil, there's all that data in memory, what happens if the power goes out? Ah, I'm glad you asked! We have a card built into the controllers called NVRAM, with memory and a battery. Its job is to mirror what's in volatile memory so those writes can still make it to disk if the lights go out.

So, back to RAID. NetApp uses RAID 4 and RAID-DP (basically RAID 4 with a second parity drive for resiliency). But Neil, aren't there better technologies than that?! Ah, glad you asked that too! See, if NetApp didn't do things differently, then yes, I'd agree with you, but with the WAFL intelligence built into the box, RAID is just a way to protect the data once it's actually on physical disk. So you see, you get RAID 1+0 resiliency but at a much lower cost!
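If you've never seen how a dedicated parity drive protects data, here is a minimal Python sketch of the single-parity (RAID 4 style) idea using XOR. The disk contents are made-up sample bytes, and the sketch does not attempt the second parity that RAID-DP adds.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte strings together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe spread across three data disks, plus a dedicated parity disk.
data_disks = [b"VDI!", b"WAFL", b"PVS."]
parity = xor_blocks(data_disks)

# Simulate losing the second data disk and rebuilding it from the survivors.
survivors = [data_disks[0], data_disks[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt)
```

XOR-ing the surviving data blocks with the parity block gives back the missing block, which is why one extra drive can cover the loss of any single drive in the group.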

So what, you ask? Well, in your PVS environment that's 90% writes, you've got a storage platform that was created with writes in mind! This is a brief and watered-down explanation, and if there's interest I'll go into more detail, but I wanted to share some of the cool factor at the core of NetApp that often gets overlooked.

Yes yes, I know, I still owe you an article on restoring PvDisk. I just got my brain back from holiday, give me a break. :-)

Until Next Time!

I'm Back!

Hi Guys and Gals,

I'm back! It's been a tough battle, but I got my brain to come back from holiday. I'd like to give a shout out to my mentor's blog:

http://rachelzhu.me/

For those that know Rachel Zhu, you know how super smart she is and that she knows Citrix and VDI like the back of her hand. She's writing a great multi-part series on XenDesktop storage best practices. I encourage you to take a look! We've been heavily testing XenDesktop on clustered ONTAP and she has some great insights.

All for now!

Tuesday, November 27, 2012

Monday, November 26, 2012

Citrix VDI with PvDisk and NetApp Best Practices - Part II.5 Site Replication

Hi All,

After I wrote the previous blog I realized I left out site replication for your VDI environment. I briefly mentioned that VSC for vSphere can kick off a SnapMirror between sites, but that isn't much help. Site recovery is a huge topic and I could go on for days, so I'll just talk about some basics. Rachel, Trevor Mansell from Citrix, and I worked on a site replication and recovery paper, TR-3931. It uses Citrix XenDesktop, PVS, XenServer, NetScaler and NetApp SnapMirror, so it might not fit the technologies you're using in your environment, but there are a ton of great disaster recovery (DR) concepts that can be applied to the architecture of your DR scenario.

For example, you need to get your data to another site. In the past this was extremely painful using file-based copying technologies, which can be slow and usually require dedicated or extra bandwidth between sites. With NetApp SnapMirror, the data is transferred at the block level, only deltas are transferred, and if you've deduped on your source side, you don't have to re-inflate the data, send it over and dedupe once again. This is a HUGE benefit!
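To show why "only deltas" matters, here is a toy Python sketch of block-level replication between two point-in-time copies. It is just a model of the concept: the dictionaries stand in for block maps, the function names are invented for this example, and it ignores deleted blocks. It is not how SnapMirror is implemented.

```python
def changed_blocks(previous, current):
    """Return the blocks that are new or different since the previous copy."""
    return {
        block: data
        for block, data in current.items()
        if previous.get(block) != data
    }


def apply_delta(replica, delta):
    """Bring the remote copy up to date using only the changed blocks."""
    replica.update(delta)


baseline = {0: "os", 1: "apps", 2: "profile-v1", 3: "docs"}
latest = {0: "os", 1: "apps", 2: "profile-v2", 3: "docs", 4: "new-file"}

replica = dict(baseline)            # the DR site already holds the baseline
delta = changed_blocks(baseline, latest)
apply_delta(replica, delta)
print(sorted(delta), replica == latest)
```

Five blocks exist at the source, but only two cross the wire, and the replica still ends up identical to the latest copy.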

So what do you copy over? This is going to depend on what your failover site looks like, but my rule would be whatever you need to make your life easier without utilizing too much storage. DR is hard enough, so make it as easy as you can and mirror everything you think you need. You can always tweak things after you've tested a failover.

In the paper we have a pair of active/active sites, so we send Infrastructure and User Data, but leave the Write Cache and Virtual Machine. We leave the vDisk because we have a working copy of it at the other site. If your architecture is active/passive, I'd copy over Infrastructure, User Data and Virtual Machine. Since the Write Cache is transient data, there's no need to copy it over.
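If you like to keep rules like that in a script, here is a tiny Python sketch of the choices above as a lookup table. The dataset names come straight from this post; the table and function names are hypothetical, and you would adjust the sets to match your own environment.

```python
# Datasets named in this post. Write Cache is transient, so it is never mirrored.
REPLICATE = {
    "active/active": {"Infrastructure", "User Data"},
    "active/passive": {"Infrastructure", "User Data", "Virtual Machine"},
}


def datasets_to_mirror(architecture):
    """Return the datasets to replicate for a given DR architecture."""
    try:
        return sorted(REPLICATE[architecture])
    except KeyError:
        raise ValueError(f"unknown architecture: {architecture!r}") from None


print(datasets_to_mirror("active/passive"))
```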

The real fun begins at orchestration, and that can be done with a variety of tools. In our paper we used the Citrix NetScaler, but if you're using vSphere, take a look at VMware Site Recovery Manager (SRM). Or maybe you've used custom scripts? If you have and don't mind sharing them, please comment and include them. I'd love to take a look at them and it could save someone else a ton of time!

Below is an image from our paper that shows two active/active sites utilizing asynchronous SnapMirror to copy over required data for a failover.

As always, I hope this blog was helpful and look forward to Part III - Restore!

Tuesday, November 20, 2012

Citrix VDI with PvDisk and NetApp Best Practices - Part II

Hi All,

With Tiny Rider's help, here's Part II. In today's blog I'm going to discuss how to back up your PvDisk in a VMware environment using the NetApp Virtual Storage Console (VSC) tool. I'll write a separate blog regarding XenServer, so today we'll focus on VMware as the hypervisor. I've probably said this before, but if you haven't seen or tried the VSC tool yet, you must download it and give it a try!

Please don't try this procedure on production desktops. Try it on some test ones and get comfortable with the technology and process before using it on production data. In other words, use at your own risk!

Now that we've got the warnings out of the way, let's back up some data! VSC allows you to back up data in various ways. You can back up a datastore, a single machine or multiple virtual machines in a datastore. For VDI I suggest you back up multiple machines from within the datastore. Why? In my opinion, this is the easiest way to back up multiple virtual machines at the same time with little interaction on your part.

How you back up and recover your data is going to depend on how you architected your virtual machine storage in XenDesktop at the host level. In my example my virtual machine storage is on a datastore called writecache1 and my Personal vDisk storage has been separated and placed on a datastore called PvDisk.

Log into your vCenter server and click on the Home icon at the top of your screen.

Once there, under Solutions and Applications click the NetApp icon.

Click on the Backup and Recovery tab on the left side and click on the Backup link. Click the Add link in the upper right-hand corner. From here you can do a lot of cool stuff. You can back up individual machines, multiple machines or entire datastores.

1. Give your backup a name and a description if you choose.

2. VSC can also kick off a SnapMirror if you already have a relationship set up between two volumes.

3. If you want you can perform a VMware consistency snapshot before the NetApp snapshot. I choose not to, which will make the VM crash consistent.

4. VERY important! If you have put your PvDisk on a different datastore from your virtual machine storage, click on Include datastores with independent disks. If you don't, your PvDisk will NOT be backed up and any customizations saved to it will be GONE! Click Next to continue.

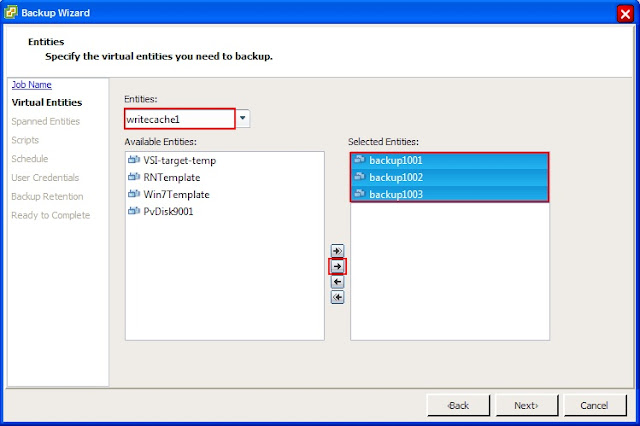

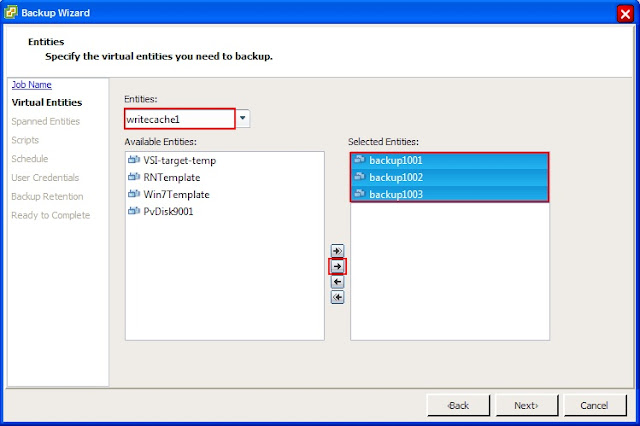

5. Select the Entities you want backed up. Here I choose writecache1 because this is the datastore my virtual machine storage is in and where my virtual machines are. Yours will be different and you can get this from the host configuration in XenDesktop.

6. Next select the virtual machines you want to back up and click the arrow to select them. Click Next to continue.

7. You should now see the datastores where your virtual machines live. Here I have PvDisk for my PvDisks and writecache1 which is everything else. Click Next.

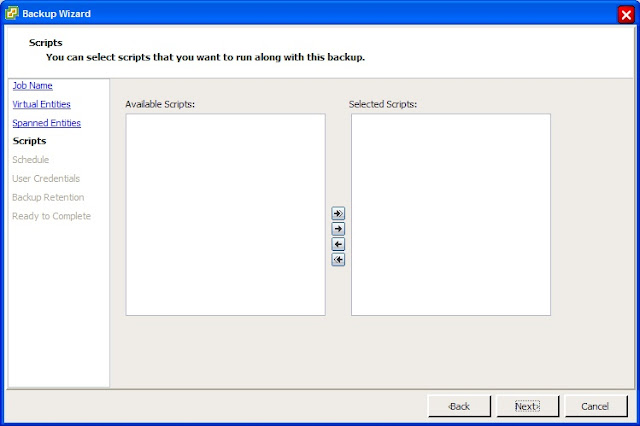

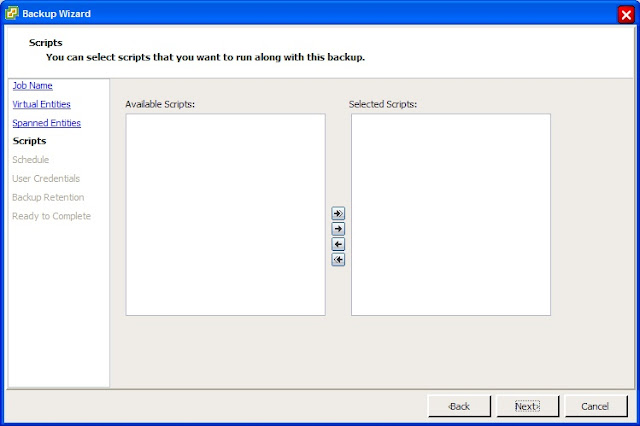

8. Here you can run any custom scripts you've created. Click Next to continue.

9. Now create a schedule for the backup. This will vary based on your needs. Here I will select One time only because I only want a single backup at this time. Click Next to continue.

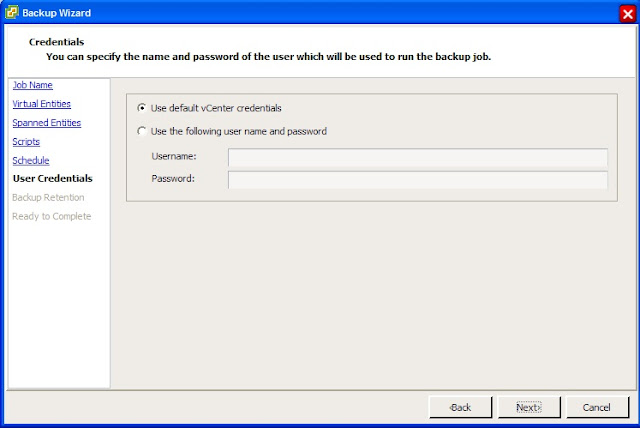

10. Enter the vCenter credentials you'd like VSC to use. Click Next.

11. Here we set the retention of the backup as well as email alerts regarding the backups. This will vary greatly depending on your requirements. Click Next.

12. Review the backup job created and click Finish when you are satisfied. To run this job you can either check Run Job Now or right-click and run the job after it's been created.
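The one step in the wizard that can really bite you is step 4, so here is a small sketch of that check as a pre-flight sanity test. To be clear, this is not a VSC API. `BackupJob` and `check` are hypothetical names I made up to model the wizard choices, using the datastore names from my example.

```python
from dataclasses import dataclass

@dataclass
class BackupJob:
    """Hypothetical record of the wizard choices; not a VSC API object."""
    name: str
    vm_datastore: str
    pvdisk_datastore: str
    include_independent_disks: bool = False
    vmware_consistency_snapshot: bool = False
    start_snapmirror: bool = False

def check(job):
    """Return a list of warnings for choices that could lose PvDisk data."""
    warnings = []
    separate = job.pvdisk_datastore != job.vm_datastore
    if separate and not job.include_independent_disks:
        warnings.append(
            "PvDisk is on a separate datastore but independent-disk "
            "datastores are not included; the PvDisk would not be backed up."
        )
    return warnings

job = BackupJob(name="vdi-test", vm_datastore="writecache1", pvdisk_datastore="PvDisk")
for warning in check(job):
    print("WARNING:", warning)
```

With the defaults above the warning fires, because PvDisk and writecache1 are different datastores. Setting `include_independent_disks=True` clears it.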

Depending on what you've selected for backup times, your VDI desktops will be backed up along with the PvDisk information. Look for restoring in Part III.

Until Next Time!

Friday, November 16, 2012

The Continuing Adventures of Tiny Rider!

Tiny Rider heard I was falling behind on Part II and Part III of my blog, so he decided to help. I took this photo when he wasn't looking!

Citrix on NetApp Best Practices in Table Form!

Hi Everyone,

It's been a really busy week and I've only been able to do a little work on Part II, so I'm posting some best practices in easy-to-follow table form! Part II and Part III are coming soon! What we have here are different Citrix VDI components and best practices on how they interact with NetApp.

XenDesktop on vSphere

| Component | Storage Protocol | Backup Method | Compression / Thin Provision / Deduplication |
| --- | --- | --- | --- |
| Write Cache | NFS | N/A | Thin Provision |
| vDisk | CIFS | NetApp Snapshot | Thin Provision / Deduplication |
| Personal vDisk | NFS | | Thin Provision / Deduplication |
| User Data | CIFS | NetApp Snapshot | Thin Provision / Deduplication / Compression |

XenDesktop on XenServer

| Component | Storage Protocol | Backup Method | Compression / Thin Provision / Deduplication |
| --- | --- | --- | --- |
| Write Cache | NFS | N/A | Thin Provision |
| vDisk | CIFS | NetApp Snapshot | Thin Provision / Deduplication |
| Personal vDisk | NFS | | Thin Provision / Deduplication |
| User Data | CIFS | NetApp Snapshot | Thin Provision / Deduplication / Compression |

Friday, November 9, 2012

The Adventures of Tiny Rider!

Here we have a picture of my wife and me at the Arc de Triomphe. As I was taking the picture, the elusive Tiny Rider popped into the photo and quickly ran off!

Thursday, November 8, 2012

Citrix VDI with PvDisk and NetApp Best Practices - Part I

Hi All,

Welcome to a three-part series regarding Citrix Streamed Desktops with Personal vDisk and NetApp best practices. Part I will consist of storage design considerations and best practices when creating streamed desktops with PvDisk. Part II will jump to best practices on backing up your new environment and Part III will discuss recovery, from individual files up to a site failure.

From my previous blog post, you can tell I really like technologies like PvDisk. I feel they are what was missing in VDI implementations for folks that needed more persistence, but great VDI products are just one side of the coin. To make your new environment even better you need a good architecture and great storage. We all know that users are a tricky bunch. If they feel their new environment is ANY slower or more problematic than what they currently have, they will complain and make your life very difficult. I can't promise your new VDI environment will be perfect, but I'll provide you with some of our latest storage best practices to help make it that much better!

Let's talk vDisk. In PVS, this is the long term memory of your VDI implementation. Everything your gold image is will be contained on your vDisk. Until recently NetApp recommended putting your vDisk on block storage, but with our support of SMB 2.1 we recommend putting it on a CIFS share. I've written about this in a previous blog so I won't spend much time on it. Go CIFS!

Write Cache is the short term memory of your VM. Anything the OS needs to do while it's running is stored on the write cache. We recommend putting the write cache on NFS and thin provisioning it. If you think about it, probably one of the largest consumers of storage will be your write cache. If you assign 10 gigs to each virtual machine, and multiply that by the number of virtual machines in your environment, you can see how quickly this can become a huge number! Thus the beauty of NFS and thin provisioning. Assign all of your desktops to the same write cache datastore or storage repository and they will only use what they need out of the shared bucket. Since it's all transient data, don't waste CPU cycles on deduplication or compression.
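Here is the write cache math as a quick sketch. The 10 GB per desktop comes from the paragraph above; the desktop count and the average used space are assumptions I picked for the example, so plug in numbers measured from your own environment.

```python
def provisioned_gb(vm_count, write_cache_gb_per_vm):
    """Capacity you would reserve if every write cache were fully allocated."""
    return vm_count * write_cache_gb_per_vm

def thin_used_gb(vm_count, average_used_gb_per_vm):
    """Capacity actually consumed when desktops share a thin-provisioned datastore."""
    return vm_count * average_used_gb_per_vm

vms = 1000            # assumed desktop count
assigned_gb = 10      # per-VM write cache size from the post
average_used_gb = 2   # assumed average; measure your own desktops

print(provisioned_gb(vms, assigned_gb))    # 10000
print(thin_used_gb(vms, average_used_gb))  # 2000
```

With these assumed numbers, fully allocating the write caches would reserve 10,000 GB, while the thin-provisioned shared datastore would only consume about 2,000 GB.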

Hmmm, if vDisk is long term memory and Write Cache is short term, I guess your user profile will be your gray matter. :-) This gives your desktop its personality. There are TONS of great products out there that do profile management: AppSense, Citrix, Liquidware Labs, RES, VMware, etc. My recommendation is to read the UEM Smackdown by Ruben Spruijt. It's an amazing paper and it will educate you tremendously on the current profile management software. Redirect your users' profiles to a CIFS share, set a backup and storage policy, sit back and relax! If you haven't used NetApp qtrees before, they're great for keeping users from eating too much storage. And we know users NEVER consume too much storage! To help keep storage consumption down, turn on deduplication and compression!

Which leaves us with PvDisk. I'm not sure what part of the brain this is, but I know it's cool! Like the write cache, put it on a shared NFS datastore or storage repository, set thin provisioning and deduplication, get a drink and put your feet up! Since your users will be installing applications here, don't use compression, as it will put extra load on your storage. Keep an eye on this, since users will be installing applications and probably putting their files there too. It can fill up very fast!

Image: Your Brain on Citrix Streamed Desktops with Personal vDisk

See you in Part II

Tuesday, November 6, 2012

Page Hits

It's pretty funny. The picture of my wife's teddy bear in a NetApp outfit has the 2nd highest hit ratio. :-)

Monday, November 5, 2012

Why I LOVE PvDisk!

Hi All,

In today's topic I'd like to discuss why I love technologies like Citrix PvDisk. PvDisk is a new product from Citrix that I think REALLY raises the bar when it comes to VDI. For the most part non-persistent desktops are pretty easy to maintain. You create your master image, roll it out and users aren't allowed to change it. When you need to update the master image with patches or virus definitions, you just do it and all of the clients get the new master image. This is great for call centers, libraries, etc., but what about folks like me who want to install stuff on their laptops?

Non-persistent is great for patching and keeping the desktops in sync, but not so good for customizations. Persistent is great for customizations, but not so good when it comes to keeping things in sync. Users have a nasty habit of installing what I call "company tolerated" applications. For example, iTunes. It's not going to cause any harm, but will IT make it a standard application? Probably not. This is where PvDisk comes to the rescue!

With PvDisk you get a drive all to yourself, it's yours, all yours!!! The VDI administrators can make it any size they desire and it will survive in a non-persistent environment. How cool is that!?! So while my C:\ drive is updated with all the latest patches, virus definitions, etc., my P:\ drive remains consistent even after refreshes. So I can install my company tolerated applications and still have a non-persistent desktop. Best of both worlds!

So where does NetApp fit into all of this? I did some testing with NetApp deduplication and compression and they still work on the P:\ drive! What could be better? If you're installing applications, I don't recommend using compression on your P:\ drive though. So your users can install their apps on their P:\ drive and store files in their CIFS shares. Happy administrators and happy users!

Until Next Time!

Wednesday, October 31, 2012

Tuesday, October 30, 2012

vDisk + CIFS + ONTAP = :-)

Hi Everybody,

Something I'd really like to share. In ONTAP 8.1.1 for 7-Mode and Cluster-Mode we now support SMB 2.1! For PVS folks this is huge news because we can put our vDisk images on CIFS shares now. We've been testing this and all I want to say is, where has this been all my life?! No more block storage for vDisk! I have nothing against block and think it's a great protocol, but this is sooooo easy.

If I've got multiple PVS servers, I no longer need multiple vDisk images or have to worry about updating all of them. I only have to update one image! If you choose to store your vDisk on block, we've got a great procedure using our FlexClones so you only have to update one vDisk image. I'll include the link later on. This also eliminates the need for clustered filesystems to share out the vDisk image. You can now use CIFS that's built right into the controller! Remember to make sure you have a version of PVS that will support this.

Dan Allen has a great blog on why this works, and he does a much better job of explaining it than I could. Take a look.

Here's the link to the article if you want to use FlexClones to clone out your vDisk on block storage.

TR-3795.

All for now.

Neil

Monday, October 29, 2012

Virtualizing XenApp Using Citrix PVS or NetApp VSC

Hi Everyone,

This blog is WAAAAAAAAAY overdue. I had this paper published in January and here it is October. The cool thing is it's still relevant. Well, I think it is anyway....

A growing trend in the XenApp world is to virtualize your XenApp servers. It's easier to manage, less hardware, and it melds very nicely with your current virtualized Citrix environment. XenApp is a very flexible tool and allows you to do MANY things in MANY different ways. In my paper I discuss launching an application from a Citrix streaming profile using a NetApp CIFS share to store the profile.

If you have plans to virtualize your XenApp servers, you'll probably want to use a product like Citrix PVS or NetApp Virtual Storage Console (VSC), because it will keep the images from changing. Remember, you've got users connecting to these things and the last thing you want is for them to be different if they connect from one to another. Some applications don't take very kindly to that. What's even cooler is you have one master image and all of the other images are read only, which makes for really easy OS updates!

In the paper I chop up the storage to reflect 1/2 using PVS and 1/2 using VSC. In the real world, you would really only do one method. I was just showing both methods to be thorough. Both are great products, but today I'm going to focus on VSC. If you'd like to try this architecture, you'll need vSphere for your hypervisor and VSC installed as a plugin.

I know what you're thinking, "But Neil, I thought VSC clones were full read/write clones, how are they helpful here?" Ah, good question! It is true that the clones created by VSC are full clones, but I'm using the Redeploy feature available to keep things in sync. If you haven't tried Redeploy yet, it's a very cool feature. Clones created from a parent can be redeployed from that parent and will retain their MAC addresses so when they come back your applications won't freak. The product works in conjunction with the Customization Specification tool built into vCenter. (Another very cool tool!) This way machines will be given their unique names and set everything correctly in Active Directory. When the servers come out of the redeploy, they have any new patches or OS changes from the parent and will be ready to stream out your XenApp application.

There's so much more I could tell you, but I wanted to share the NetApp VSC and Redeploy capability in this architecture. If you've got any questions or comments, please feel free to write one!

Thanks for your time.

Neil

Thursday, October 25, 2012

Why Enterprise Storage for Writecache?

Hi Everyone,

I'm often asked, "Why should I put my writecache on your storage? It's only transient data." While I was somewhere over the Atlantic I had a thought (yes, yes, occasionally I do get them) regarding RPO and RTO. For those of you who don't know, these are terms used in disaster recovery and backup recovery. RPO is the Recovery Point Objective and RTO is the Recovery Time Objective. Basically, how fast do you want to recover, and to what point in your data? So what does any of this have to do with the writecache? Good question!

Our best practice states you should put the writecache on disk so you don't overwhelm your PVS server and for lots of other good reasons. Yeah, yeah, I know there will be tons of people saying, "Just put the writecache on the server," but that just raises a whole new set of problems. Even though that disk is just transient data, it's still an integral part of the OS, so I asked my performance engineer to try a test! He had 100 desktops running LoginVSI and he pulled the writecache datastore. Things were not pretty after that. The desktops hung and were even grayed out in vCenter. vCenter still functioned, but it was slow and clearly did not like what we had just done.

So imagine your writecache is on less expensive storage that has no fault tolerance. Will user data be lost? Probably not, but your users will be stuck until you can get something online again. Hence RTO. We're always so concerned about protecting data that I think we forget about protecting the infrastructure too. Think of this as the public transit of VDI. Writecache won't make you the new super start up, but it will get you to work so you can focus on becoming that super start up.

Hey, for more information on NetApp and PVS take a look here:

NetApp and PVS

It's a great paper written by Rachel Zhu and me.

Wednesday, October 24, 2012

Hello World

Hi and welcome to my Blog. My name is Neil Glick and I'm a Virtualization Architect for NetApp. I focus primarily on storage and Citrix technologies, but from time to time I'll throw in some other things. I hope you enjoy!

I just got back from Citrix Synergy in Barcelona. Here's a picture of me and our FlexPod. Don't we look great together? The event was great, but I got a cold.

While there I taught a course with Citrix and Cisco with my mentor Rachel Zhu. Our portion of the class focused on Virtual Storage Console for XenServer, NetApp System Manager and OnCommand Insight Balance.

VSC for XenServer is a free **(Edit 02/07/2013 - Regarding VSC for both VMware and XenServer - They are free to download, but do require specific licenses for some features.)** XenServer plugin created by NetApp to enable provisioning of storage, creation of virtual machines and much more from within XenServer! Please see this link for more information:

VSC for XenServer

OnCommand Insight Balance is an awesome reporting application from NetApp that can help zero in on your virtualization environment and find problems before they become problems! Here's a YouTube video I did with Mike Laverick.

Balance

That's all for now!
