Ahoy Ahoy,

Today I thought I'd do something a little different. Instead of writing about a single feature in VAAI, I thought I'd talk about the thing I struggled with the most: the on/off switch. While researching for this blog I found this great blog written by Jason Langer!

Since VAAI is made up of a bundle of APIs for SAN, and now for NAS, there isn't a simple way to check that everything is running, but I'm going to show you a few tricks that I hope will help!

Step 1. Installing.

If you're running SAN on a VAAI-supported version of ESX or ESXi and your storage also supports VAAI, congratulations, you've installed VAAI correctly! HUH?! Yep, for SAN it's already in there. Okay, what about NAS? Well, that's a bit more involved....

1. Go to VSC inside vCenter. (If you're not using NetApp, you should be able to get the plug-in from your storage vendor.) Under Monitoring and Host Configuration, select the tools link. At the bottom of the screen you'll see the NFS Plug-in for VMware VAAI. Install the plug-in on each ESXi server that you want to have NAS VAAI functionality.

2. Now let's make sure the plug-in was installed correctly. Log onto your ESXi machine and type:

# esxcli software vib list | grep NetApp

NetAppNasPlugin 1.0-018 NetApp VMwareAccepted 2013-02-21

If you don't see the plug-in, something went wrong. Remember, you need to reboot your ESXi host, but not the NetApp controller.
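If you'd rather script that check than eyeball it, here's a minimal Python sketch. It assumes you've already captured the output of `esxcli software vib list` as text and that the columns look like the sample line above. `find_vib` is a hypothetical helper name used for illustration, not part of any VMware or NetApp tool.

```python
def find_vib(vib_list_output, name="NetAppNasPlugin"):
    """Return (name, version) for the first VIB whose name matches, else None.

    Assumes whitespace-separated columns with the VIB name first and the
    version second, as in the sample line from this post.
    """
    for line in vib_list_output.splitlines():
        fields = line.split()
        if fields and fields[0] == name:
            version = fields[1] if len(fields) > 1 else ""
            return fields[0], version
    return None


# Sample line copied from the output shown above.
sample_vib_output = "NetAppNasPlugin 1.0-018 NetApp VMwareAccepted 2013-02-21"

if find_vib(sample_vib_output) is None:
    print("Plug-in not found, something went wrong")
else:
    print("Plug-in installed:", find_vib(sample_vib_output))
```

A `None` result is the scripted version of "you don't see the plug-in".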

3. Awesome, both SAN and NAS are installed and ready to go! Well, sort of.... We now have to enable VAAI on the controller for NFS. For both 7-Mode and Clustered ONTAP, we enable VAAI at the CLI.

7-Mode:

options nfs.vstorage.enable on

cDOT:

vserver nfs modify -vserver vserver_name -vstorage enabled

4. Outstanding, now your NetApp is ready! So you're ready, right?! Well, maybe.... There are a few settings that should be ready to go, but let's double check, just in case.

5. Log into vCenter and click on one of your ESXi servers. Select Configuration and then the Advanced Settings link under Software. Now look under "DataMover" and "VMFS3" for three settings: DataMover.HardwareAcceleratedMove, DataMover.HardwareAcceleratedInit, and VMFS3.HardwareAcceleratedLocking. Make sure all three are set to "1". If they're not, some of the VAAI functions aren't going to work.

And I haven't forgotten about my CLI fans. If you don't want to check in the GUI, go to the CLI and type:

# esxcli system settings advanced list -o /DataMover/HardwareAcceleratedMove

# esxcli system settings advanced list -o /DataMover/HardwareAcceleratedInit

# esxcli system settings advanced list -o /VMFS3/HardwareAcceleratedLocking

Look for a value of "Int Value: 1"; that means it's enabled.
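Checking three settings by hand on every host gets old fast, so here's a rough Python sketch of the same test. It assumes you've saved the text output of each of the three commands above, keyed by option path. The sample text is illustrative: only the "Int Value: 1" line comes from this post, and the "Default Int Value" line is an assumption about the surrounding output. `int_value` and `disabled_settings` are hypothetical helper names.

```python
import re

# The three option paths queried in the commands above.
VAAI_SETTINGS = (
    "/DataMover/HardwareAcceleratedMove",
    "/DataMover/HardwareAcceleratedInit",
    "/VMFS3/HardwareAcceleratedLocking",
)


def int_value(output):
    """Return the integer on the 'Int Value:' line, or None if it is missing.

    The pattern is anchored to the start of a line so that a line such as
    'Default Int Value: 1' (assumed to appear in the output) is not matched.
    """
    match = re.search(r"^[ \t]*Int Value:[ \t]*(-?\d+)[ \t]*$", output, re.MULTILINE)
    return int(match.group(1)) if match else None


def disabled_settings(outputs):
    """outputs maps option path -> captured text; return the paths not set to 1."""
    return [path for path in VAAI_SETTINGS if int_value(outputs.get(path, "")) != 1]


# Illustrative captured output (assumed layout), one entry per setting.
sample_outputs = {
    path: "   Int Value: 1\n   Default Int Value: 1\n" for path in VAAI_SETTINGS
}
print(disabled_settings(sample_outputs))
```

An empty list means all three settings are enabled; anything in the list is a setting to go fix.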

6. Okay so now we're ready right?! Well sorta... Couple more things to check. Let's make sure the storage devices are VAAI ready. Go back to your CLI window and type:

# esxcli storage core device list

This will give you a full list of all your devices attached to your ESXi box. Grab the "naa" identifier of one of your NetApp devices and type:

# esxcli storage core device vaai status get -d naa.60a9800032466635635d414c45554356

naa.60a9800032466635635d414c45554356

VAAI Plugin Name: VMW_VAAIP_NETAPP

ATS Status: supported

Clone Status: supported

Zero Status: supported

Delete Status: supported
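If you have a lot of devices to check, the status output above is easy to parse. Here's a small Python sketch that turns the captured text into a dictionary and lists anything that isn't "supported". It assumes the "Name Status: value" layout shown above; `parse_vaai_status` and `unsupported_primitives` are hypothetical helper names.

```python
def parse_vaai_status(output):
    """Collect every 'Something Status: value' line into a dict."""
    status = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().endswith("Status"):
            status[key.strip()] = value.strip()
    return status


def unsupported_primitives(status):
    """Return the status names whose value is anything other than 'supported'."""
    return [name for name, value in status.items() if value != "supported"]


# Output copied from the device shown above.
sample_status_output = """naa.60a9800032466635635d414c45554356
VAAI Plugin Name: VMW_VAAIP_NETAPP
ATS Status: supported
Clone Status: supported
Zero Status: supported
Delete Status: supported
"""

status = parse_vaai_status(sample_status_output)
print(status)
print(unsupported_primitives(status))
```

For the device above the second list comes back empty, which matches what you'd conclude by reading the output.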

How about ATS-Only? (It's a new feature I'll cover in a later blog.) Type the following, where vaai_iscsi2 is the name of the datastore you want to check:

# vmkfstools -Ph -v1 /vmfs/volumes/vaai_iscsi2

VMFS-5.58 file system spanning 1 partitions.

File system label (if any): vaai_iscsi2

Mode: public ATS-only

Capacity 100 GB, 99.1 GB available, file block size 1 MB

Volume Creation Time: Thu Apr 11 20:48:33 2013

Files (max/free): 130000/129992

Ptr Blocks (max/free): 64512/64496

Sub Blocks (max/free): 32000/32000

Secondary Ptr Blocks (max/free): 256/256

File Blocks (overcommit/used/overcommit %): 0/971/0

Ptr Blocks (overcommit/used/overcommit %): 0/16/0

Sub Blocks (overcommit/used/overcommit %): 0/0/0

UUID: 516721a1-dd91425c-ee36-00c0dd1bcac4

Partitions spanned (on "lvm"):

naa.60a9800032466635635d414c45554356:1

Is Native Snapshot Capable: YES

OBJLIB-LIB : ObjLib cleanup done.
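The only line that matters for ATS-Only in all of that output is "Mode". As a sketch, assuming the `vmkfstools` output has been captured as text and the "Mode:" line looks like the one above, a check in Python could be as small as this (`is_ats_only` is a hypothetical helper name):

```python
def is_ats_only(vmkfstools_output):
    """Return True if the 'Mode:' line includes the 'ATS-only' flag."""
    for line in vmkfstools_output.splitlines():
        label, sep, value = line.partition(":")
        if sep and label.strip() == "Mode":
            return "ATS-only" in value.split()
    return False  # no Mode line found


# A few lines copied from the output above.
sample_fs_output = """VMFS-5.58 file system spanning 1 partitions.
File system label (if any): vaai_iscsi2
Mode: public ATS-only
Capacity 100 GB, 99.1 GB available, file block size 1 MB
"""

print(is_ats_only(sample_fs_output))
```

A datastore whose Mode line reads just "public" would return False here.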

Excellent, looking great! Functionality is listed as supported and ATS-Only is set. Let's just check one more thing inside the GUI and away we'll go!

7. Log into vCenter and click on an ESXi server and select Configuration > Storage Adapters. Select the storage you want to check, in this case iSCSI, and look at the bottom of the screen. As shown here Hardware Acceleration is listed as "Supported".

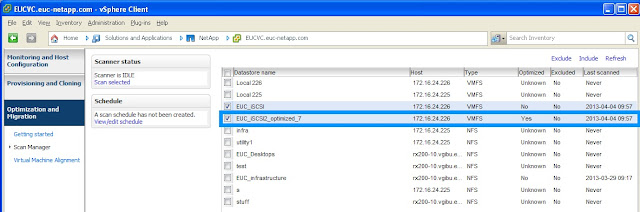

8. Now head to VSC in vCenter and under Monitoring and Host Configuration select Overview. Take a look at the VAAI Capable column.

Congratulations, you're good to go! Remember to check the functions of your storage, because some of the VAAI functions might not be enabled for your storage vendor. In another blog I'll show you how to use ESXTOP so you can see that VAAI is actually working. :-)

Enjoy!

-Brain